I recently dug through OpenClaw founder Peter Steinberger's 2025-2026 blog posts and got thoroughly hooked. He isn't just publishing technical write-ups; he's living a pragmatic, anti-motivational personal operating system built around "using AI agents to write code." His take on AI agents hits hard: not the kind of colleague who whiteboards grand visions or deflects blame in meetings, but a silent, gritty workhorse who grumbles at you occasionally yet never drops the ball when it matters.

What struck me most is his brutally honest, almost offensive one-liner: "These days I don’t read much code anymore. I watch the stream and sometimes look at key parts, but I gotta be honest - most code I don’t read." To him, code is no longer a document to dissect line by line; it’s more like a rushing river. You don’t need to jump in and swim with the current. You stand on the bank and toss the occasional stone to steer it. He also drops this vivid metaphor: "Building software is like walking up a mountain. You don’t go straight up; you circle around it, take turns, sometimes veer off-path and have to backtrack a bit. It’s imperfect, but eventually you get to where you need to be." In other words, progress comes from course corrections, not from a flawless straight-line climb.

Now let’s unpack this highly actionable methodology through his blog’s funny yet pointed grumbling. It might make you laugh out loud, but trust me, the substance is real.

What’s wilder is his "pathological trust" in models: "If codex comes back and hasn’t solved it in one shot, I already get suspicious." When an AI fails a complex task, his first thought isn’t "Was the task too hard?" but "Is the prompt bad? Context messy? Model having an off day?" It’s a mindset as blunt as it is refreshing.

He also busts an industry myth: "Most software does not require hard thinking. Most apps shove data from one form to another, maybe store it somewhere, then show it to the user." Software, he says, is mostly "data shuffling + display," not rocket science—AI can handle it. He later adds a sting: "The amount of software I can create is now mostly limited by inference time and hard thinking." His output now depends on AI speed and his own rare bursts of deep thought, not typing speed.

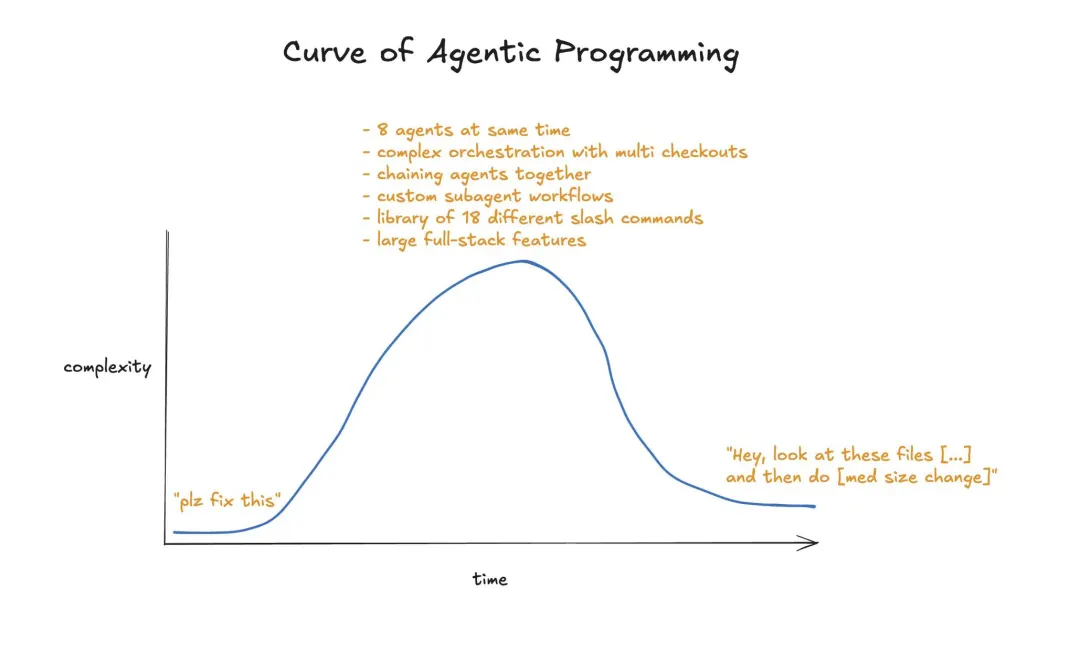

The funniest part? He self-identifies as a "Claudoholic", admitting he’s hooked on the dopamine hit of "just one more prompt"—like a gambler glued to a slot machine. "I’m that person that drags in a clipped image of some UI component with ‘fix padding’ or ‘redesign’"—snapping a UI screenshot, tossing it to AI, and saying "fix this" in one line. Yet in Just One More Prompt, he questions whether this is hyper-productivity or a new form of workaholism—even burnout in disguise. His snark shines through too, like when Claude Code finally fixed its notorious flicker bug: "Hell froze over. Anthropic fixed Claude Code’s signature flicker in their latest update (2.0.72)"—equal parts shock, relief, and dark humor.

Peter’s first move in any problem-solving scenario is always to gauge the "blast radius"—arguably the most recurring meme in his blog: "The important question is always: how big is the blast radius of this change?" Before touching code, he prioritizes understanding the scope of impact.
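To make "blast radius" concrete, here is a minimal sketch of one way to estimate it: walk a module dependency map backwards and collect everything that directly or transitively depends on the module you are about to change. This is my own illustration, not something the quoted posts show; the `blast_radius` function, the `IMPORTS` map, and the module names are all hypothetical.

```python
from collections import deque


def blast_radius(changed, imports):
    """Return every module that directly or transitively depends on `changed`."""
    # Invert the "A imports B" map into "B is imported by A".
    dependents = {}
    for module, deps in imports.items():
        for dep in deps:
            dependents.setdefault(dep, set()).add(module)

    # Breadth-first walk over the inverted graph, starting at the changed module.
    affected, queue = set(), deque([changed])
    while queue:
        current = queue.popleft()
        for parent in dependents.get(current, ()):
            if parent not in affected and parent != changed:
                affected.add(parent)
                queue.append(parent)
    return affected


# Hypothetical project layout, for illustration only.
IMPORTS = {
    "app": ["ui", "storage"],
    "ui": ["theme"],
    "storage": ["models"],
    "models": [],
    "theme": [],
}

print(sorted(blast_radius("models", IMPORTS)))  # ['app', 'storage']
print(sorted(blast_radius("app", IMPORTS)))     # []
```

A change to a leaf such as `models` ripples up through everything built on top of it, while a change to the top-level `app` touches nothing else. That difference is roughly what decides whether a prompt is safe to queue alongside others or deserves a closer look first.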

He despises two extremes: over-specifying (micromanaging AI with rigid constraints that stifle creativity) and under-communicating (vague demands that force AI to guess). His sweet spot leans toward the loose end: "Under-specify is often better - the model will fill in the gaps in surprisingly good ways." Leaving some ambiguity lets AI surprise him with elegant solutions.

His secret weapon? Cross-project reuse. A simple instruction like "look at ../vibetunnel and do the same for Sparkle changelogs" leverages his AI-optimized codebase (clean structure, intuitive naming) for rapid "copy-paste-with-brains" scaling. He self-deprecates: "I do know where which components are and how things are structured—and that’s usually all that’s needed." No need to memorize details; just know the architecture, and let AI handle the rest.

Peter’s daily grind is a mirror of AI-agent-driven coding, laced with his signature casual banter—authentic and relatable:

- Project docs live in docs/*.md; a global AGENTS.MD file lists prompts like “always read docs/XXX first” for quick AI reference; cross-project refs use raw folder paths. Simple and effective.

Peter’s technical choices come with his signature blend of pragmatism and light snark:

"Design the codebase to be AI-agent-friendly → Chat with it using minimal prompts + screenshots → Gauge blast radius before queueing for parallel inference → Sit back, watch the stream, curse occasionally → Evolve linearly on main → Cultivate intuition through massive interactions until software grows like weeds—or become a Claudoholic and rue the day."

At its core, this is the ultimate play of "maximize model inference time, minimize human cognitive load." But Peter never forgets to throw in a reality check (with self-deprecation): "Writing good software is still really hard. AI just moved the bar from ‘typing’ to ‘taste + architecture + direction’. And yes, I’m still the bottleneck—just a fancier one now."

After reading his blog posts, I finally get it: the joy of coding in the AI era might be watching models sprint ahead while muttering, "No, not like that, like this!" and then firing off an impulsive "just one more prompt." That addictive yet efficient feeling is something only those who’ve truly coded with AI agents will understand.

Author: Lema